Multi-class classification to identify visual instructions for an autonomous vehicle

In farming, robots are increasingly used for tasks such as carrying tools or following farmers through fields. To make these robots easier to control, we use AI to recognize simple visual instructions, such as hand signals. This project trains a multi-class classifier to tell different gestures apart, so the robot can respond quickly and work more naturally alongside people in the field.

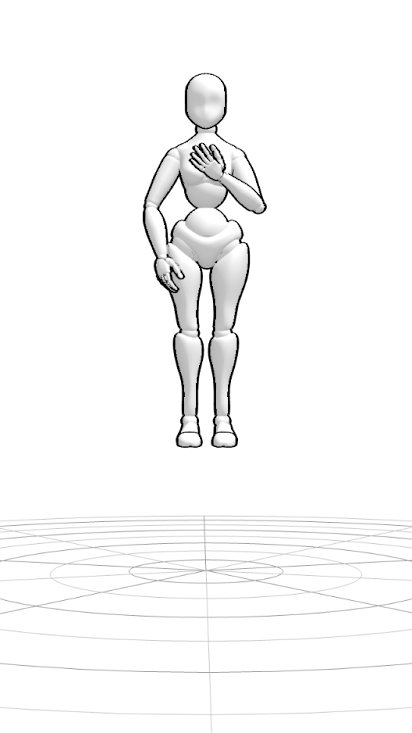

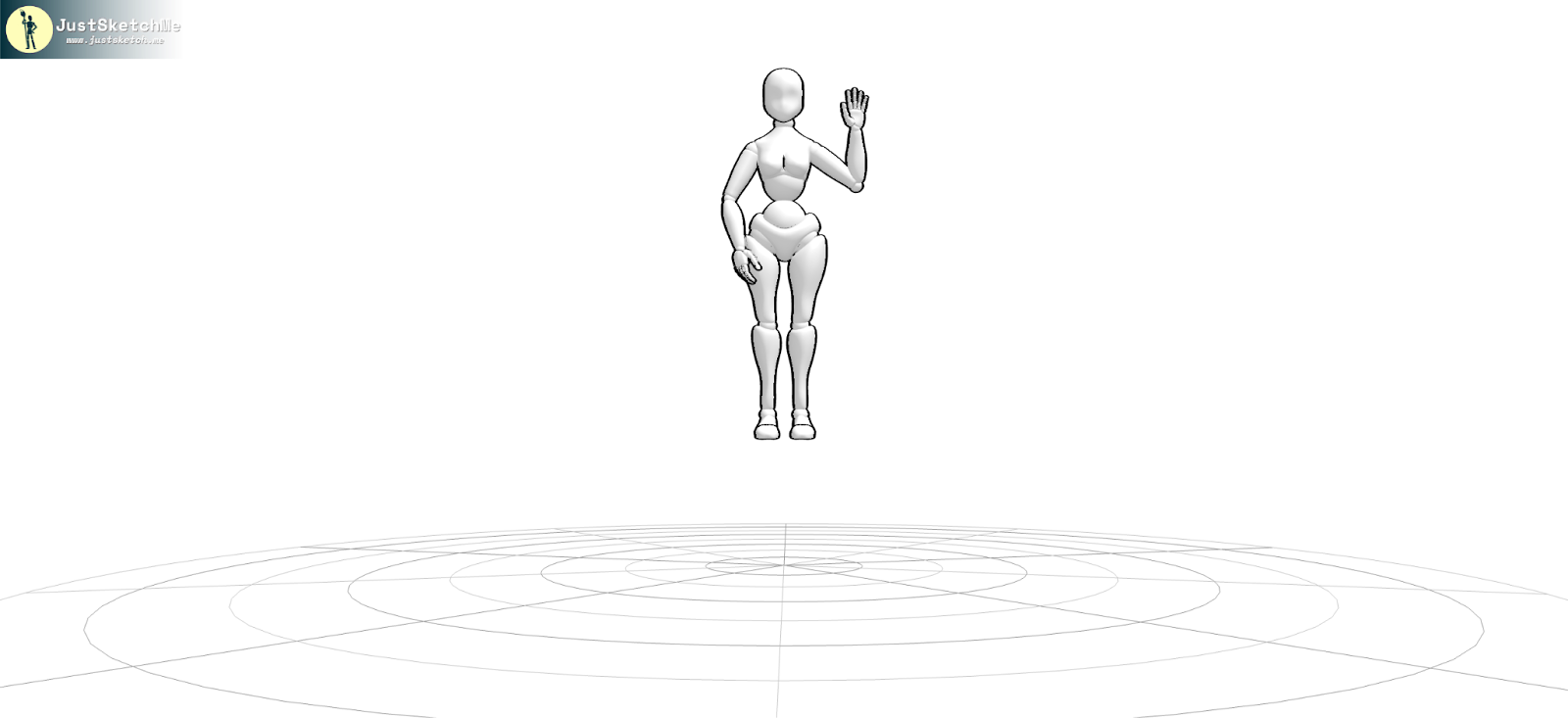

These poses serve as clear visual commands, allowing the robot to understand specific instructions in real time. A multi-class image classification model runs on a live video stream onboard the autonomous vehicle to interpret these gestures as they happen.
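To make the per-frame interpretation concrete, here is a minimal sketch of how a multi-class classifier's output could be turned into a command for each video frame. It is not the project's actual pipeline. The gesture names in `GESTURES`, the `min_confidence` threshold, and the `model` callable are all assumptions made for illustration, and the "frames" in the demo are simply lists of raw class scores standing in for real camera images.

```python
import math
from typing import Callable, Iterable, Iterator, List, Optional, Sequence

# Hypothetical gesture classes; the real label set is not given in the text.
GESTURES = ["stop", "follow", "turn_left", "turn_right"]


def softmax(logits: Sequence[float]) -> List[float]:
    """Convert raw class scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(logits: Sequence[float], min_confidence: float = 0.6) -> Optional[str]:
    """Return the most likely gesture, or None if the model is not confident.

    The 0.6 threshold is an assumed value, not one taken from the project.
    """
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return GESTURES[best] if probs[best] >= min_confidence else None


def interpret_stream(
    frames: Iterable[object],
    model: Callable[[object], Sequence[float]],
    min_confidence: float = 0.6,
) -> Iterator[Optional[str]]:
    """Classify each frame as it arrives and yield one command (or None) per frame."""
    for frame in frames:
        yield classify(model(frame), min_confidence)


if __name__ == "__main__":
    # Stand-in for a camera and a trained network: each "frame" is already
    # a list of class scores, and the "model" just passes it through.
    fake_frames = [
        [4.0, 0.5, 0.1, 0.2],  # strongly favours the first class
        [0.1, 0.2, 0.1, 0.2],  # ambiguous, so no command is issued
        [0.0, 0.3, 3.5, 0.1],  # strongly favours the third class
    ]
    for command in interpret_stream(fake_frames, model=lambda f: f):
        print(command)
```

Returning `None` for low-confidence frames is one possible design choice: it lets the vehicle ignore ambiguous poses rather than act on a guess. In a real deployment, `model` would wrap the trained network and `frames` would come from the onboard camera.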

Live ML demo